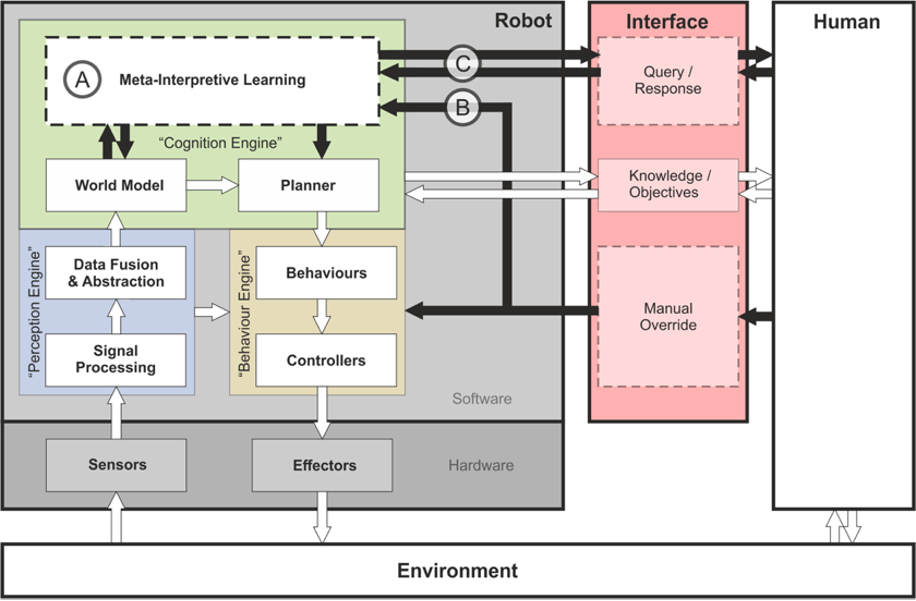

The project aims to improve the trustworthiness of autonomous decision-making for mobile robots by ensuring that they can recognise and resolve the operational and legal ambiguities that commonly arise in the real world. This will be achieved through the paradigm of human-like computing (HLC), using meta-interpretive learning (MIL).

The human-like decision-making will be encoded in a variety of ways:

A. By design, from operational and legal experts, in the form of initial logical rules (background knowledge);

B. Through passive learning of new logical representations and rules when humans intervene to override a robot that is not behaving as expected; and

C. Through recognising ambiguities before they arise and actively learning rules to resolve them with human assistance.

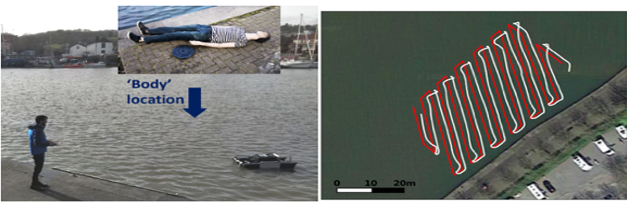

A general trustworthy robotic framework will be developed to incorporate the new approach. As a case study, we will focus on autonomous aquatic applications, e.g., an autonomous "robot boat" for underwater crime scene investigation and emergency response with the Metropolitan Police.

"One-shot learning" traditionally refers to classifying a single instance using a machine learning model pre-trained on extensive datasets. In contrast, Meta-Interpretive Learning (MIL), a type of Inductive Logic Programming (ILP), can generate complex logic programs from just a single positive example and minimal background knowledge, without prior extensive training. This approach offers a human-centered form of machine learning that is more controllable, reliable, and comprehensible due to its small training data size and the inherent interpretability of logic programs. We use PyGol, a novel implementation of MIL based on Meta Inverse Entailment (MIE), and compare its performance with ...
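As a toy illustration of learning a logic rule from a single positive example, the sketch below instantiates a "chain" rule template, P(X, Y) :- Q(X, Z), R(Z, Y), against a small set of background facts. It is not PyGol's API and does not implement Meta Inverse Entailment; the predicate names, facts, and helper functions are all hypothetical.

```python
from itertools import product

# Hypothetical background knowledge: predicate name -> set of (x, y) facts.
BACKGROUND = {
    "mother": {("ann", "bob")},
    "father": {("bob", "cal")},
}


def chain_holds(q, r, x, y):
    """True if q(x, z) and r(z, y) both hold for some z in the background facts."""
    return any(a == x and (z, y) in BACKGROUND[r] for a, z in BACKGROUND[q])


def learn_chain_rule(target, example):
    """Return the first chain rule over background predicates that covers the example."""
    x, y = example
    for q, r in product(sorted(BACKGROUND), repeat=2):
        if chain_holds(q, r, x, y):
            return f"{target}(X, Y) :- {q}(X, Z), {r}(Z, Y)."
    return None  # no instantiation of the template explains the example


# One positive example is enough to pick out a rule in this toy setting.
rule = learn_chain_rule("grandparent", ("ann", "cal"))
print(rule)
```

The learned hypothesis is a readable clause rather than a set of weights, which is the property the abstract refers to as comprehensibility.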

We present NumLog, an Inductive Logic Programming (ILP) system designed for feature range discovery. NumLog generates quantitative rules with clear confidence bounds to discover feature-range values from examples. Our approach focuses on generating rules with minimal complexity from numerical values, ensuring the assessment of methods that could impact accuracy and comprehensibility. Traditional ILP systems, especially those intersecting with computer vision, struggle with numerical data. This convergence presents unique challenges, often hindering the generation of meaningful insights due to the limited capabilities of conventional ILP systems to...
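To make the idea of a feature-range rule concrete, here is a minimal sketch, not NumLog's algorithm: it takes the tightest interval that covers all positive examples of one numeric feature and reports, as an assumed stand-in for a confidence value, the fraction of covered examples that are positive. The feature name and the data are made up.

```python
from dataclasses import dataclass


@dataclass
class RangeRule:
    feature: str
    low: float
    high: float
    confidence: float  # fraction of covered examples that are positive (an assumption)

    def covers(self, value: float) -> bool:
        return self.low <= value <= self.high


def learn_range_rule(feature, positives, negatives):
    """Tightest interval covering every positive, scored by empirical precision."""
    if not positives:
        raise ValueError("need at least one positive example")
    low, high = min(positives), max(positives)
    covered_negatives = sum(1 for v in negatives if low <= v <= high)
    confidence = len(positives) / (len(positives) + covered_negatives)
    return RangeRule(feature, low, high, confidence)


# Hypothetical hue values for one class (positives) and for other classes (negatives).
rule = learn_range_rule("hue", [0.1, 0.15, 0.2], [0.18, 0.5, 0.9])
print(rule)
```

A rule of this shape reads as "the class holds when `hue` lies in [`low`, `high`]", which keeps the hypothesis short and inspectable.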

Deep learning serves as a crucial component in computer vision, enabling accurate predictions from raw data. However, unlike human cognition, deep learning models are vulnerable to adversarial attacks. This paper introduces a new method for traffic sign recognition that employs Inductive Logic Programming (ILP) to generate logical rules from a limited set of examples. These rules are used to assess the logical consistency of predictions, which is then incorporated into the neural network through the loss function. The study investigates the effect of incorporating logical rules into deep learning models on the robustness of vision tasks in autonomous vehicles...
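One way a logical-consistency term could enter a loss function is sketched below. This is an illustration under stated assumptions, not the paper's formulation: the rules, class labels, the `weight` parameter, and the choice of penalty (probability mass placed on classes that contradict an observed shape) are all hypothetical.

```python
import math

# Hypothetical rules: each class label paired with the sign shape it logically requires.
RULES = {"stop": "octagon", "yield": "triangle", "speed_limit": "circle"}


def cross_entropy(probs, true_label):
    """Standard cross-entropy for a single example."""
    return -math.log(probs[true_label])


def inconsistency(probs, observed_shape):
    """Probability mass assigned to classes whose rule contradicts the observed shape."""
    return sum(p for label, p in probs.items() if RULES[label] != observed_shape)


def logic_aware_loss(probs, true_label, observed_shape, weight=1.0):
    """Cross-entropy plus a weighted penalty for logically inconsistent predictions."""
    return cross_entropy(probs, true_label) + weight * inconsistency(probs, observed_shape)


# A made-up prediction for an octagonal sign.
probs = {"stop": 0.5, "yield": 0.25, "speed_limit": 0.25}
loss = logic_aware_loss(probs, "stop", "octagon")
print(loss)
```

In a real network the same penalty would be computed on differentiable tensors so that gradients push probability away from rule-violating classes.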

Traffic sign detection is crucial for Autonomous Vehicles (AVs) to ensure the safety of navigation. Although Neural Networks (NN) are widely used for AV perception systems, they are susceptible to adversarial attacks due to their dependence on pixel-level features, which can be manipulated to deceive the system and cause misclassification of traffic signs. To address this issue, we propose a logic-based neuro-symbolic generalisation approach to detect traffic signs. The proposed methodology decomposes the sign detection task into sub-tasks focused on sign features like shape and text...

Fractals are geometric patterns with identical characteristics in each of their component parts. They are used to depict features which have recurring patterns at ever-smaller scales. This study offers a technique for learning from fractal images using Meta-Interpretive Learning (MIL). MIL has previously been employed for few-shot learning from geometrical shapes (e.g. regular polygons) and has exhibited significantly higher accuracy when compared to Convolutional Neural Networks (CNN). Our objective is to illustrate the application of MIL in learning from fractal images. We first generate a dataset of images of simple fractal and non-fractal geometries and then ...

The spatial configuration of a sports team, for example in a soccer match, indicates its tactics. Team tactics may be specified as either offensive or defensive. We propose a method using Meta-Interpretive Learning (MIL) to generate rules, learned from video, that explain the strategy of a football game. We first track the players to estimate their positions and the team's formation. To classify players as defender, midfielder, or attacker, our method combines k-means and OPTICS clustering. We measure the dynamic strategy within the time series by generating background knowledge; a new rule is then extracted to explain the team strategy with regard to the current state...
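The role-classification step can be illustrated with a small k-means sketch. This covers only the k-means half of the combination described above (no OPTICS), works on a single coordinate, and uses invented positions; the function names and the deterministic initialisation are assumptions made for the example.

```python
def kmeans_1d(values, k=3, iterations=20):
    """Plain k-means on one coordinate, with deterministic initial centroids."""
    ordered = sorted(values)
    # Spread the initial centroids evenly over the sorted values.
    centroids = [ordered[i * (len(ordered) - 1) // (k - 1)] for i in range(k)]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for v in values:
            nearest = min(range(k), key=lambda c: abs(v - centroids[c]))
            clusters[nearest].append(v)
        # Recompute each centroid; keep the old one if its cluster is empty.
        centroids = [sum(c) / len(c) if c else centroids[i] for i, c in enumerate(clusters)]
    return centroids


def assign_roles(x_positions):
    """Label each player by the nearest centroid, ordered from own goal outwards."""
    centroids = sorted(kmeans_1d(x_positions))
    roles = ["defender", "midfielder", "attacker"]
    return [roles[min(range(3), key=lambda c: abs(x - centroids[c]))] for x in x_positions]


# Made-up distances (in metres) of nine outfield players from their own goal line.
positions = [10, 12, 15, 14, 40, 45, 50, 80, 85]
roles = assign_roles(positions)
print(roles)
```

Role labels of this kind could then be turned into background facts for rule learning, though how the published method does so is not specified here.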
